Overview

Many organizations have chosen OpenStack as the platform for their cloud deployment. OpenStack is composed of a couple dozen independent project components (nova, keystone, cinder, etc.) that work together to provide a robust and versatile cloud platform. The component that provides block storage to the platform is Cinder. In this article I’ll cover the details required to use QuantaStor as a storage backend for OpenStack Cinder.

Before I get started, here’s a brief synopsis of each technology covered in this article, for those not already familiar with them.

OpenStack is…

a free, open-source platform for deploying Infrastructure as a Service (IaaS) in both public and private clouds, providing users with virtual servers and other resources through a web-based dashboard, command-line tools, or RESTful web services. It was initially developed in 2010 as a joint project between Rackspace Hosting and NASA. OpenStack consists of interrelated components that manage diverse, multi-vendor hardware pools of processing, storage, and networking resources throughout a data center.

QuantaStor is…

an enterprise-grade Software Defined Storage platform that turns standard servers into multi-protocol, scale-out storage appliances and delivers file, block, and object storage. OSNexus has made managing enterprise-grade, performant scale-out storage (Ceph-based) and scale-up storage (ZFS-based) extremely simple in QuantaStor, and able to be done quickly from anywhere. The differentiating Storage Grid technology makes storage management a breeze.

At a Glance

QuantaStor can provide block storage to OpenStack in two different ways: Scale-Out Block Storage using Ceph RBD, and Scale-Up Block Storage using ZFS. I’ll go over the details you’ll need to configure OpenStack Cinder to use both technologies. I won’t be covering how to set up QuantaStor, OpenStack or Cinder; I expect all three to be installed and ready to go.

Cinder Configuration with Scale-Up Block Storage (ZFS-based)

OSNexus has developed a custom OpenStack Cinder driver that lets Cinder use QuantaStor Scale-Up Block Storage (ZFS-based) volumes. Let’s start with an overview of the steps involved:

- Determine the pool ID for the QuantaStor Scale-Up Block Storage pool

- Install the QuantaStor Cinder driver to the Cinder node(s)

- Configure the cinder.conf file to use the driver

- Restart services

- Verify the loading of the driver

Determine the Pool ID

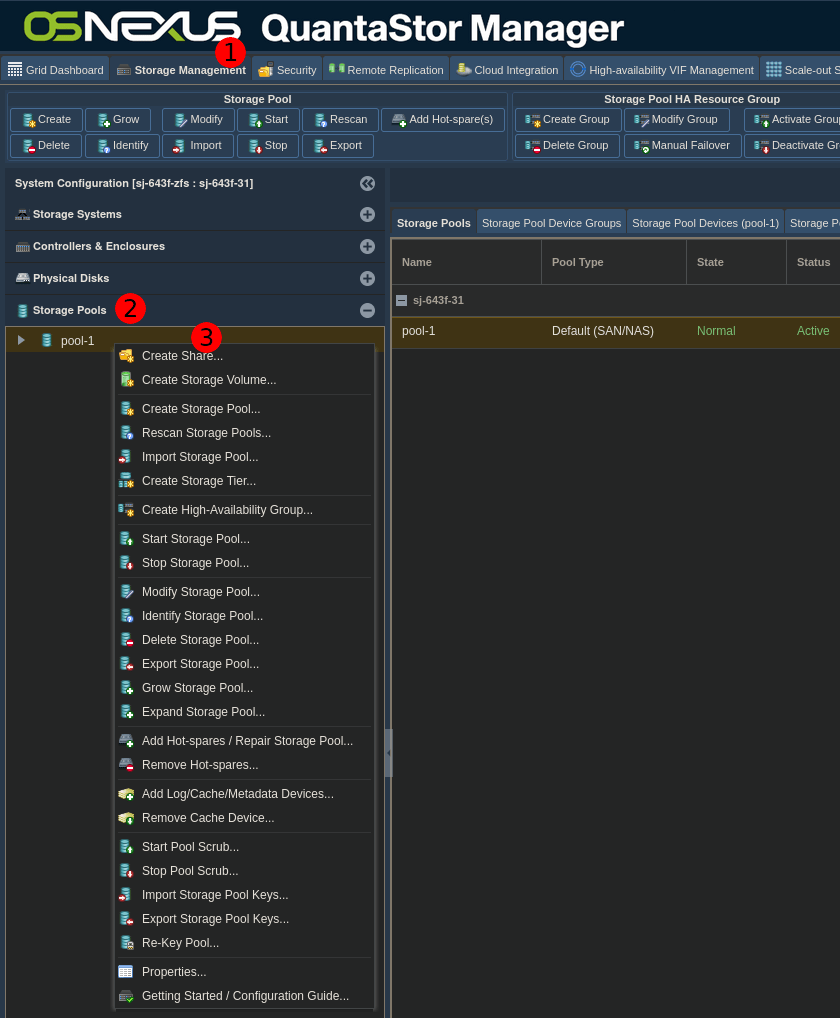

To determine the pool ID to use via the web GUI:

- Click the Storage Management tab (right next to Grid Dashboard) at the top-left of the interface

- In the navigation pane on the left side of the interface, select Storage Pools

- Right-click the pool name to reveal the submenu and select Properties

- Note the Internal ID in the dialog box. Mine is fdc99d65-9a2f-e1cf-afcb-f3c961d3be58. Note that unlike the Ceph configuration below, you DO NOT prefix the Internal ID with “qs-” for use in the cinder.conf file.

For now, just copy and paste the Scale-Up Block Storage pool ID somewhere as you’ll need it shortly.
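Because a stray prefix or a truncated paste is easy to miss, a quick format check can help before the ID goes into cinder.conf. The following is a minimal sketch and not part of QuantaStor or the Cinder driver: the helper name `is_bare_pool_id` is hypothetical, and it assumes Internal IDs always have the 8-4-4-4-12 hex shape of the example ID above.

```shell
# Hypothetical helper (not shipped with QuantaStor): succeeds only when the
# argument looks like a bare Internal ID, i.e. 8-4-4-4-12 hex digits with no
# "qs-" prefix. The UUID-like shape is an assumption based on the example ID.
is_bare_pool_id() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}
```

For example, `is_bare_pool_id fdc99d65-9a2f-e1cf-afcb-f3c961d3be58 && echo "looks OK"` should print the message, while the same ID with a "qs-" prefix should not.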

Install the QuantaStor Cinder Driver

Next, you’ll need to add the QuantaStor Cinder driver, provided by OSNexus, to the proper location for Cinder to find it. Connect to the Cinder node you want to install to (SSH) and complete the following:

# First, locate the proper directory to install the driver.

# My install uses /var/lib while others may use /usr/lib.

root@cinder:/# find / -path '*cinder/volume/drivers'

/var/lib/openstack/lib/python3.10/site-packages/cinder/volume/drivers

root@cinder:/# DRVPATH=`find / -path '*cinder/volume/drivers'`

# Now download the driver into that directory

root@cinder-ceph:/# curl \

-o $DRVPATH/QuantaStor.py \

http://qstor-downloads.s3.amazonaws.com/cinder/liberty/QuantaStor.py

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 19820 100 19820 0 0 71324 0 --:--:-- --:--:-- --:--:-- 71552

# Validate the ownership and permissions on the driver.

# I'm running cinder in a docker container, so mine is root.

# Yours might be the cinder user.

root@cinder-ceph:/# ls -l /var/lib/openstack/lib/python3.10/site-packages/cinder/volume/drivers/QuantaStor.py

-rw-r--r-- 1 root root 19820 Mar 21 18:04 /var/lib/openstack/lib/python3.10/site-packages/cinder/volume/drivers/QuantaStor.py

# Check the other files in that dir to see how they're owned and match it up.

root@cinder-ceph:/# ls -fl /var/lib/openstack/lib/python3.10/site-packages/cinder/volume/drivers/

Configure cinder.conf

Edit /etc/cinder/cinder.conf to configure the new driver. Use the Scale-Up Block Storage pool ID you found earlier.

[DEFAULT]

...

# volume_name_template needs to start with "cinder-"

# for QS to work properly with Cinder

volume_name_template = cinder-%s

volume_group = cinder-volumes

...

enabled_backends = quantastor

[quantastor]

volume_driver=cinder.volume.drivers.QuantaStor.QuantaStorDriver

volume_backend_name = quantastor

qs_ip=10.0.18.31

# Can be found in the GUI:

# Storage Management -> Storage Pools ->

# Right-click pool name, select Properties.

# Copy the Internal ID as-is (do NOT prefix it with "qs-").

qs_pool_id=fdc99d65-9a2f-e1cf-afcb-f3c961d3be58

qs_user = admin

qs_password = password
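Before restarting anything, it can be worth checking the edited file for the mistakes this section warns about. The sketch below is only an illustration under a few assumptions: the helper names `conf_get` and `check_quantastor_backend` are hypothetical, the parser handles only simple single-line `key = value` entries, and the checks mirror the rules stated above (backend listed in enabled_backends, volume_name_template starting with "cinder-", qs_pool_id without a "qs-" prefix).

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI file.
# Handles only simple single-line "key = value" / "key=value" entries.
conf_get() {
    # usage: conf_get FILE SECTION KEY
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {                      # section header, e.g. [quantastor]
            gsub(/^[ \t]*\[|\][ \t]*$/, "")
            current = $0
            next
        }
        /^[ \t]*[#;]/ { next }             # skip comment lines
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Hypothetical check of the settings discussed in this section.
check_quantastor_backend() {
    # usage: check_quantastor_backend FILE BACKEND_SECTION
    case ",$(conf_get "$1" DEFAULT enabled_backends | tr -d ' ')," in
        *",$2,"*) ;;
        *) echo "'$2' is not listed in enabled_backends" >&2; return 1 ;;
    esac
    case "$(conf_get "$1" DEFAULT volume_name_template)" in
        cinder-*) ;;
        *) echo "volume_name_template must start with 'cinder-'" >&2; return 1 ;;
    esac
    case "$(conf_get "$1" "$2" qs_pool_id)" in
        "")   echo "qs_pool_id is missing from [$2]" >&2; return 1 ;;
        qs-*) echo "qs_pool_id must not carry the 'qs-' prefix" >&2; return 1 ;;
    esac
    return 0
}
```

With the configuration above, `check_quantastor_backend /etc/cinder/cinder.conf quantastor` should return success silently; any message on stderr points at the setting to revisit.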

Enable QuantaStor Cinder Driver Logging (optional)

The QuantaStor Cinder driver has the ability to log directly to a separate file. To implement this:

root@cinder:/# touch /var/log/qs_cinder.log

root@cinder:/# chmod 666 /var/log/qs_cinder.log

Restart Cinder Services

Restart the Cinder services to make the changes take effect:

root@cinder:/# systemctl restart openstack-cinder-volume

root@cinder:/# systemctl restart openstack-cinder-api

Verify Driver Is Loaded

Check Cinder logs for errors:

root@cinder:/# journalctl -u openstack-cinder-volume --no-pager | grep -i "error"

# And/Or...

root@cinder:/# less /var/log/qs_cinder.log

# And/Or...

root@cinder:/# cinder get-pools --detail

You should now have Cinder configured to use QuantaStor Scale-Up Block Storage.

Cinder Configuration with Scale-Out Block Storage (Ceph-based)

To connect QuantaStor Scale-Out Block Storage to Cinder we can use Cinder’s built-in Ceph RBD driver. Let’s start with an overview of the steps involved:

- Determine the pool ID for the QuantaStor Scale-Out Block Storage pool

- Configure the cinder.conf file

- Provide the Ceph configuration

- Restart services

- Verify the loading of the driver

Determine the Pool ID

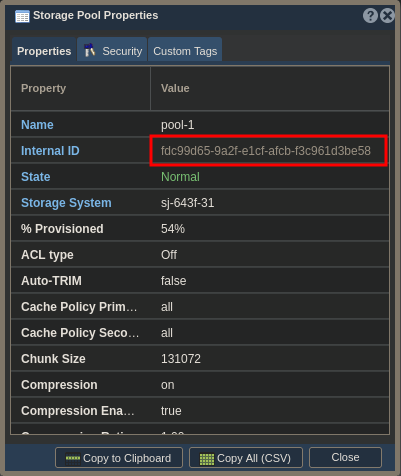

To determine the pool ID to use via the web GUI:

- Click the Storage Management tab (right next to Grid Dashboard) at the top-left of the interface

- In the navigation pane on the left side of the interface, select Storage Pools

- Right-click the pool name to reveal the submenu and select Properties

- Note the Internal ID in the dialog box. Mine is 3773ec3f-d36c-bdfc-167b-c3336f366d28. Prefix the Internal ID with “qs-” and you’ve got the value to use in the cinder.conf file.

For now, just copy and paste the Scale-Out Block Storage pool ID somewhere as you’ll need it shortly.
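The prefix rule is simple enough to capture in a tiny helper. This is only a sketch: the function name `to_rbd_pool_name` is hypothetical, and it does nothing more than apply the "qs-" prefix described above, leaving already-prefixed values alone.

```shell
# Hypothetical helper: turn a QuantaStor Internal ID into the Ceph pool name
# used for rbd_pool. A value that already starts with "qs-" is left unchanged.
to_rbd_pool_name() {
    case "$1" in
        qs-*) printf '%s\n' "$1" ;;
        *)    printf 'qs-%s\n' "$1" ;;
    esac
}
```

For example, `to_rbd_pool_name 3773ec3f-d36c-bdfc-167b-c3336f366d28` prints the value used for rbd_pool in the next step.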

Configure cinder.conf

Edit /etc/cinder/cinder.conf to configure the new driver. Use the Scale-Out Block Storage pool ID you found earlier.

[DEFAULT]

...

# volume_name_template needs to start with "cinder-"

# for QS to work properly

volume_name_template = cinder-%s

volume_group = cinder-volumes

...

# Per https://docs.ceph.com/en/latest/rbd/rbd-openstack/#configuring-cinder

enabled_backends = ceph

glance_api_version = 2

[ceph]

# Options from https://github.com/openstack/cinder/blob/master/cinder/volume/drivers/rbd.py

volume_driver = cinder.volume.drivers.rbd.RBDDriver

volume_backend_name = ceph

# QS ceph cluster name is ALWAYS "ceph".

# The friendly name in the GUI is an internal QS name

# that is not related to the actual ceph cluster name.

rbd_cluster_name = ceph

# rbd_pool needs to be the Internal ID of the pool, not

# the GUI pool name. In the pool properties page you'll

# find the "Internal ID". Prefix it with "qs-" and you're good to go.

rbd_pool = qs-3773ec3f-d36c-bdfc-167b-c3336f366d28

# The ceph.conf file needs to have a newline at the

# end of the file or cinder won't parse it properly.

rbd_ceph_conf = /etc/ceph/ceph.conf

# Created in the GUI:

# Security -> Scale-out File & Block Keyrings ->

# Create Client Keyring

rbd_user = openstack-rbd

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

Provide Ceph Config to Cinder

The Cinder Ceph driver needs some information about the Ceph cluster in order to connect: the basic details of the cluster and the credentials of the user it will connect as. Luckily, QuantaStor can provide both. On the Cinder node, we’ll create the /etc/ceph directory and populate it with that information. You could create the files on your own computer and copy them over to the Cinder node, but for illustration purposes I’m going to copy the contents from the QuantaStor web GUI and paste them directly into the target files on the Cinder node over my SSH connection. Use your favorite terminal-based text editor (nano, vi, emacs, etc.); I’m going to use vi.

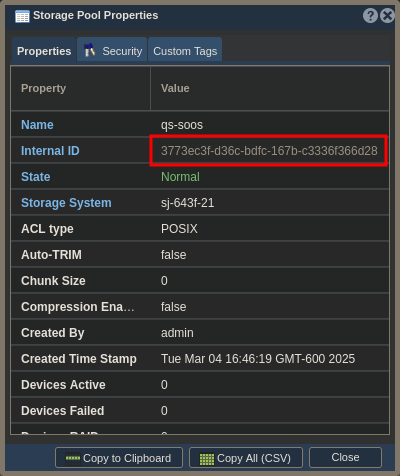

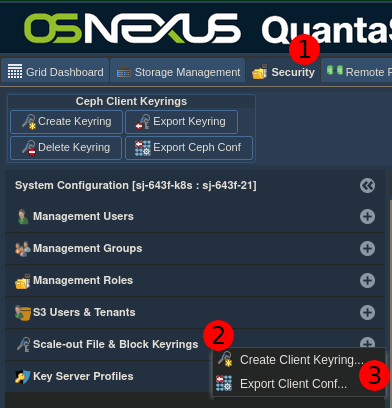

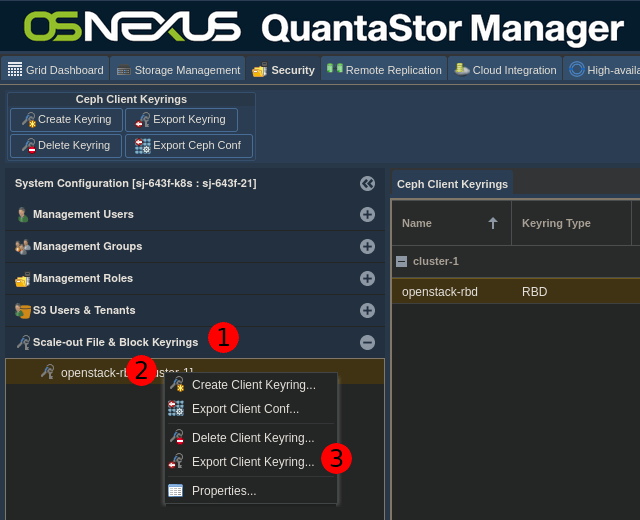

First, we’ll create a Ceph user for Cinder to use for the connection. Starting out in the QuantaStor web GUI, do the following:

- Click the Security tab (right next to Storage Management) at the top-left of the interface

- In the navigation pane on the left side of the interface, right-click Scale-out File & Block Keyrings to reveal the submenu

- Select Create Client Keyring

In the Ceph Client Keyring Create dialog, complete the following:

- Enter a Name for the user account

- Select the cluster (take note of the cluster name for a future step)

- For this specific activity with Cinder, select Ceph RBD

- Check the pool that the user should have access to

- Click OK

Now we’ll get the user data we need to give the Cinder Ceph driver:

- Left-click Scale-out File & Block Keyrings in the navigation pane to reveal the user you just created

- Right-click the user you created

- Select Export Client Keyring

In the Ceph Client Keyring Export dialog, make sure you select the user you just created and then highlight and copy the contents of the Key Block field.

Now go back to the SSH session to your Cinder node and do the following, using the cluster name that you found earlier and the username you just created:

root@cinder:/# mkdir /etc/ceph

root@cinder:/# vi /etc/ceph/{CLUSTERNAME}.client.{USERNAME}.keyring

# Paste in the content you copied and save the file

Here’s my keyring contents:

[client.openstack-rbd]

key = AQDkhMdnCEuFCRAAg3vu8Rcr1SfFCIRLiMPfCw==

caps mgr = "allow profile rbd"

caps mon = "allow profile rbd"

caps osd = "profile rbd pool=qs-3773ec3f-d36c-bdfc-167b-c3336f366d28"
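A pasted keyring is easy to get subtly wrong (a missing header line, or a keyring exported for a different pool), so a quick check can save a restart cycle. The following is a rough sketch under stated assumptions: the helper names `keyring_has_client` and `keyring_osd_pool` are hypothetical, and the checks assume the keyring layout shown above, with the `[client.…]` header on a line of its own and the pool named in the `caps osd` line.

```shell
# Hypothetical helpers for sanity-checking an exported client keyring.

# Succeeds if FILE contains a [client.USERNAME] header line and a key line
# whose value looks like base64 text.
keyring_has_client() {
    # usage: keyring_has_client FILE USERNAME
    grep -Fxq "[client.$2]" "$1" &&
        grep -Eq '^[[:space:]]*key[[:space:]]*=[[:space:]]*[A-Za-z0-9+/]+=*[[:space:]]*$' "$1"
}

# Prints the pool named in the "caps osd" line, if there is one.
keyring_osd_pool() {
    # usage: keyring_osd_pool FILE
    sed -n 's/^[[:space:]]*caps osd[[:space:]]*=.*pool=\([^" ,]*\).*$/\1/p' "$1"
}
```

The value printed by `keyring_osd_pool` should be the same "qs-"-prefixed pool name you set as rbd_pool in cinder.conf; if the two differ, the user most likely was not granted access to the pool Cinder is pointed at.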

That’s all that’s necessary for the user. Now we need to do something similar with the ceph.conf file:

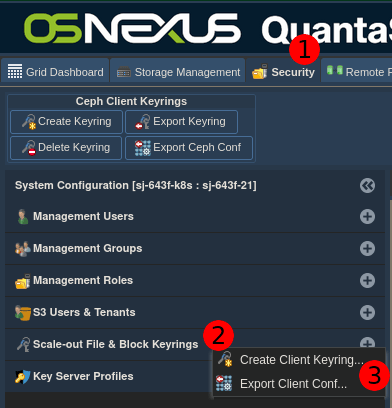

- Click the Security tab (right next to Storage Management) at the top-left of the interface

- In the navigation pane on the left side of the interface, right-click Scale-out File & Block Keyrings to reveal the submenu

- Select Export Client Conf

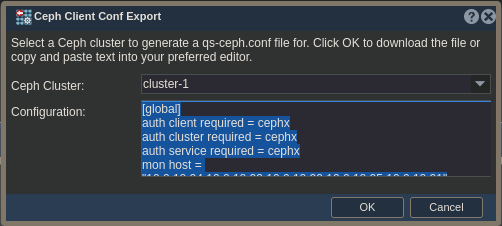

In the Ceph Client Conf Export dialog, select the cluster you’re working with and then highlight and copy the contents of the Configuration field.

Now return again to the SSH session to your Cinder node and do the following:

root@cinder:/# vi /etc/ceph/ceph.conf

# Paste in the copied content BUT DON'T SAVE AND CLOSE YET!!

We need to add one more piece of information to the ceph.conf file before we save and close it. Do the following:

- Click the Scale-out Storage Configuration tab (second-to-last tab) at the top-left of the interface

- In the navigation pane on the left side of the interface, select Data & Journal Devices

- Expand the cluster entry and any one of the nodes

- Right-click any one of the osd entries to reveal the submenu

- Select Properties

- Note the Cluster FSID field in the dialog box. Mine is e19fc559-35b3-610a-0f3f-d3b4d612c6e9. Highlight that value and copy it.

Now, returning again to the SSH session, add a line directly under the “[global]” line and paste the following, using the copied value in place of {FSID}:

fsid = {FSID}

Now you can save and close the file. My completed ceph.conf file looks like this:

[global]

fsid = e19fc559-35b3-610a-0f3f-d3b4d612c6e9

keyring = /etc/ceph/qs-soos.client.openstack-rbd.keyring

auth client required = cephx

auth cluster required = cephx

auth service required = cephx

mon host = "10.0.18.21,10.0.18.24,10.0.18.23,10.0.18.22,10.0.18.25"
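Since the file is assembled by hand, a few quick checks can catch the usual slips: a missing fsid line, a keyring path that does not exist, or a missing final newline (which, as noted in the cinder.conf comments, keeps Cinder from parsing the file properly). This is a minimal sketch with hypothetical helper names; note that `has_fsid` only looks for an fsid line anywhere in the file and does not verify that it sits under [global].

```shell
# Hypothetical helpers for sanity-checking a hand-edited ceph.conf.

# Succeeds if FILE is non-empty and its last byte is a newline.
# Command substitution strips a trailing newline, so the captured text is
# empty when that last byte is "\n".
ends_with_newline() {
    [ -s "$1" ] && [ -z "$(tail -c 1 "$1")" ]
}

# Succeeds if FILE has an fsid line whose value is 36 hex-or-dash characters.
has_fsid() {
    grep -Eq '^[[:space:]]*fsid[[:space:]]*=[[:space:]]*[0-9a-fA-F-]{36}[[:space:]]*$' "$1"
}

# Prints the keyring path named in FILE so it can be tested with [ -r ... ].
conf_keyring_path() {
    sed -n 's/^[[:space:]]*keyring[[:space:]]*=[[:space:]]*//p' "$1"
}
```

A possible way to combine them on the Cinder node is `ends_with_newline /etc/ceph/ceph.conf && has_fsid /etc/ceph/ceph.conf && [ -r "$(conf_keyring_path /etc/ceph/ceph.conf)" ] && echo "ceph.conf looks OK"`.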

And that completes what we need to do to tell the Cinder Ceph driver what it needs to know about connecting to QuantaStor Scale-out Block Storage.

Restart Cinder Services

Restart the Cinder services to make the changes take effect:

root@cinder:/# systemctl restart openstack-cinder-volume

root@cinder:/# systemctl restart openstack-cinder-api

Verify Driver Is Loaded

Check Cinder logs for errors:

root@cinder:/# journalctl -u openstack-cinder-volume --no-pager | grep -i "error"

# And/Or...

root@cinder:/# cinder get-pools --detail

You should now have Cinder configured to use QuantaStor Scale-Out Block Storage.

Final Words

I hope you found this article on configuring OpenStack Cinder to use QuantaStor Scalable Block Storage useful in your OpenStack adventure. OpenStack can be deployed a number of ways, so the way you introduce the configuration and the driver to the Cinder module can vary, but the configuration outlined above should work with any deployment method.

I’d love to hear feedback from your adventure deploying this. Please start a comment thread and let me know the good, the bad and the ugly.

If you have ideas for additional posts that would be valuable to you please don’t hesitate to drop me a line and share them at steve.jordahl at osnexus.com!

Useful Resources

- QuantaStor

- Ceph

- OpenStack

- Cinder RBD Driver Code (Valuable to find driver params)
